Five Things to Pay Attention to in a New Poll

You’ve just read in the news: “77% of surveyed registered voters said that ‘Reducing the influence of special interests and corruption in Washington’ is either the most important or a very important issue facing the country.”

We often see the media citing statistics on how the public feels about major issues. Where do they get these statistics from, and how do we know they are true?

The short answers are: from polls, and it’s complicated. Polling can help us gauge how a particular audience feels about any number of issues, and it is often used to evaluate public opinion. Understanding public opinion is instrumental in guiding our messaging and advocacy on the issues we care about.

When assessing public opinion, we need to know which factors to pay attention to in any new poll we come across. Sometimes even news articles interpret polls inaccurately, and it is up to us to spot when this is the case!

Here are five major things to pay attention to when looking at a new poll:

1. Sample size

The sample size is the number of people polled. In general, the bigger the sample size, the better! Sample sizes of over 1,000 are best, both for ensuring that the polling results are representative of the population you’re trying to understand and for comparing subgroups of that population (like different racial categories or partisan groups). A sample under 700 is too small to compare most subgroups (except maybe gender or party). If the sample size is too small, the poll’s results will not be reliable.

For more information on sampling, see the Pew Research Center Methods 101 video on Sampling and Weighting.

2. Margin of Error (MOE)

Margin of error tells you how much a polling result may differ from the “true” percentage in the population. The larger the margin of error, the less certain you can be that your results are a close match to the real answer. Margin of error decreases as sample size increases. So if you have a small sample size, you have a large margin of error, making your polling results less reliable than those from a large sample with a small MOE.
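To make the link between sample size and MOE concrete, here is a minimal sketch using the standard textbook approximation for a simple random sample at a 95% confidence level. The function name `margin_of_error` and its defaults are our own illustration, not something a pollster publishes; real polls usually apply weighting, which tends to make the actual MOE somewhat larger than this estimate.

```python
import math


def margin_of_error(n, p=0.5, z=1.96):
    """Approximate margin of error, as a proportion, for a sample of size n.

    Assumptions (illustrative only): simple random sampling, a 95%
    confidence level (z = 1.96), and the worst case p = 0.5, which
    gives the largest possible margin for a given sample size.
    """
    if n <= 0:
        raise ValueError("sample size must be positive")
    return z * math.sqrt(p * (1 - p) / n)


# Compare a few sample sizes: the margin shrinks as the sample grows.
for n in (200, 700, 1000, 2000):
    print(f"n = {n:>4}: +/- {margin_of_error(n) * 100:.1f} points")
```

Under these assumptions, a sample of 1,000 gives a margin of roughly three points, while a subgroup of 200 respondents within that same poll has a margin more than twice as large. That is why subgroup comparisons need bigger samples.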

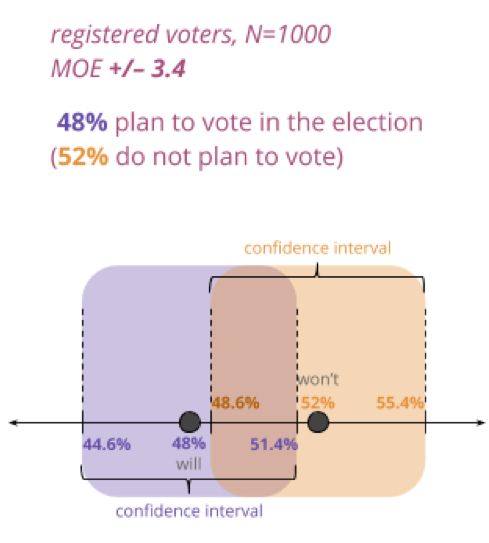

In a poll, if there is any overlap in MOE ranges of two responses to the same question (e.g., a poll with an MOE of +/-3.4% finds that 48% plan to vote and 52% do not), the two findings are not statistically different. We would call that “within the margin of error” or say that it’s about 50-50.
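The overlap check described above can be sketched in a few lines. This is a simplified illustration of the rule of thumb in this section, not a formal significance test, and the function name `moe_ranges_overlap` is hypothetical.

```python
def moe_ranges_overlap(pct_a, pct_b, moe):
    """Return True if the MOE ranges around two results overlap.

    All three arguments are in percentage points. Each result is
    treated as the range [pct - moe, pct + moe].
    """
    low_a, high_a = pct_a - moe, pct_a + moe
    low_b, high_b = pct_b - moe, pct_b + moe
    return low_a <= high_b and low_b <= high_a


# The example from the text: 48% vs. 52% with an MOE of +/-3.4 points.
# The ranges are 44.6-51.4 and 48.6-55.4, which overlap.
print(moe_ranges_overlap(48, 52, 3.4))
```

When the function returns `True`, the safest reading is that the two responses are roughly tied.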

3. Mode

Mode is the way a poll is physically conducted, usually by phone or online. Phone polls with live callers (still the “gold standard”) are great for representativeness, but respondents may not always be fully honest when speaking to a live caller. Internet polls allow for more honest responses, but there is no live caller to clarify questions and answers. It is crucial that phone polls include cell phones.

For more information, see the Pew Research Center videos on Random Sampling (via phone polling) and Nonprobability Surveys (via online polling).

4. Single topic vs. Omnibus

A single-topic poll covers only one topic, and provides more in-depth exploration and more room for experimenting, message testing, etc.

An omnibus poll includes many different topics, with one to three questions on each. Omnibus polls are the most common type of poll for news organizations and major pollsters. They often have larger sample sizes and get more top-of-mind reactions.

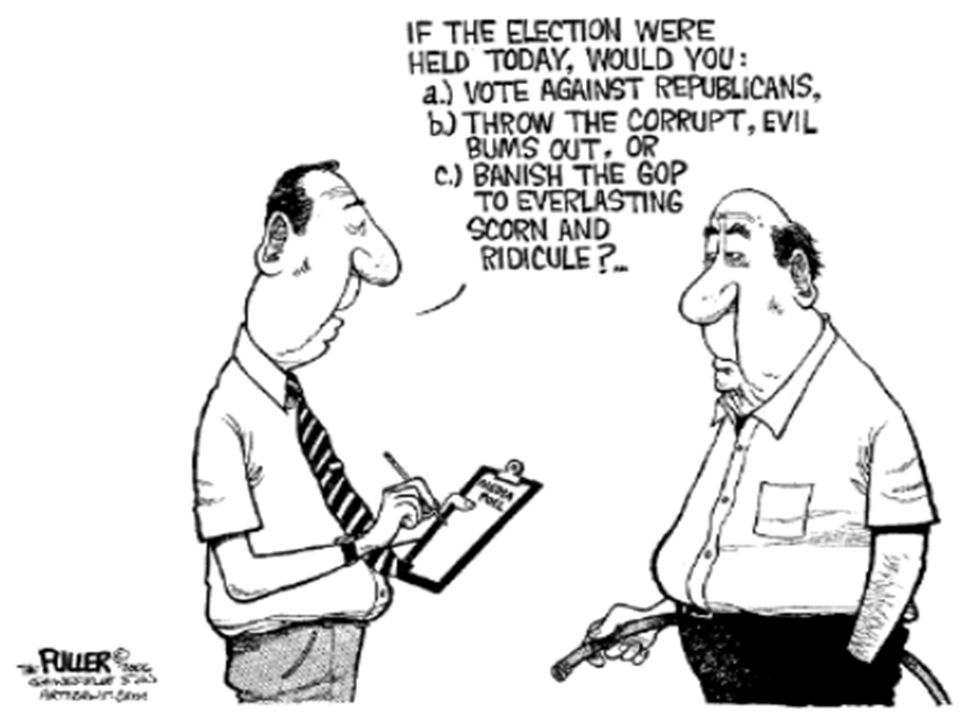

5. Question wording

How you ask makes a difference. If a question is worded in a suggestive or biased way, it will bias the results. It is important to look at the polling question to see if it is sound before citing polling results. Are respondents being pushed toward a certain answer? Does the question include all reasonable answer options? You are an expert in your field, so if a question sounds funky, it probably is!

For more information, see the Pew Research Center Methods 101 video on Question Wording.

No poll is perfect. Any one poll is never enough to tell us with certainty what the public thinks about a particular issue. Polling is an art as much as it is a science, and methodologies vary widely. Given this, in addition to looking at a poll’s anatomy, you can also assess whether a poll is trustworthy by:

Checking other polls

The best way to assess public opinion is to look at several polls conducted with different samples, modes, etc., and average their findings. An average of several polls with different methodologies (provided that they are sound) is generally more likely to be accurate than a single poll.
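As a rough sketch of what averaging looks like in practice, the snippet below takes the simple mean of several polls’ results for the same question. The numbers are hypothetical placeholders, not real poll findings, and the function name `average_polls` is our own. Professional poll aggregators typically use more elaborate weighting than a plain average.

```python
from statistics import mean


def average_polls(results):
    """Return the simple (unweighted) average of poll results, in percent."""
    if not results:
        raise ValueError("need at least one poll result")
    return mean(results)


# Hypothetical results from three polls asking the same question.
# These values are made up purely for illustration.
hypothetical_results = [74.0, 77.0, 80.0]
print(f"Polling average: {average_polls(hypothetical_results):.1f}%")
```

A plain average treats every poll equally, so it only makes sense after you have checked that each poll is sound on the five points above.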

Checking the pollster’s rating

FiveThirtyEight provides a rigorous rating of pollsters’ accuracy and methodology. To check out a pollster’s reliability, go to https://projects.fivethirtyeight.com/pollster-ratings/

Here are some common polling sites that track public opinion on major issues. Many news articles that cite polls will also link to the polling results, where you can look at the polling questions, results, and crosstabs (the breakdown of results based on different demographic and other characteristics):